Human Evaluation

Sometimes you need human judgment to evaluate how well your models perform. The Human Evaluation feature supports this with two kinds of tests: A/B tests, which compare two variants, and single model tests, which score one variant.

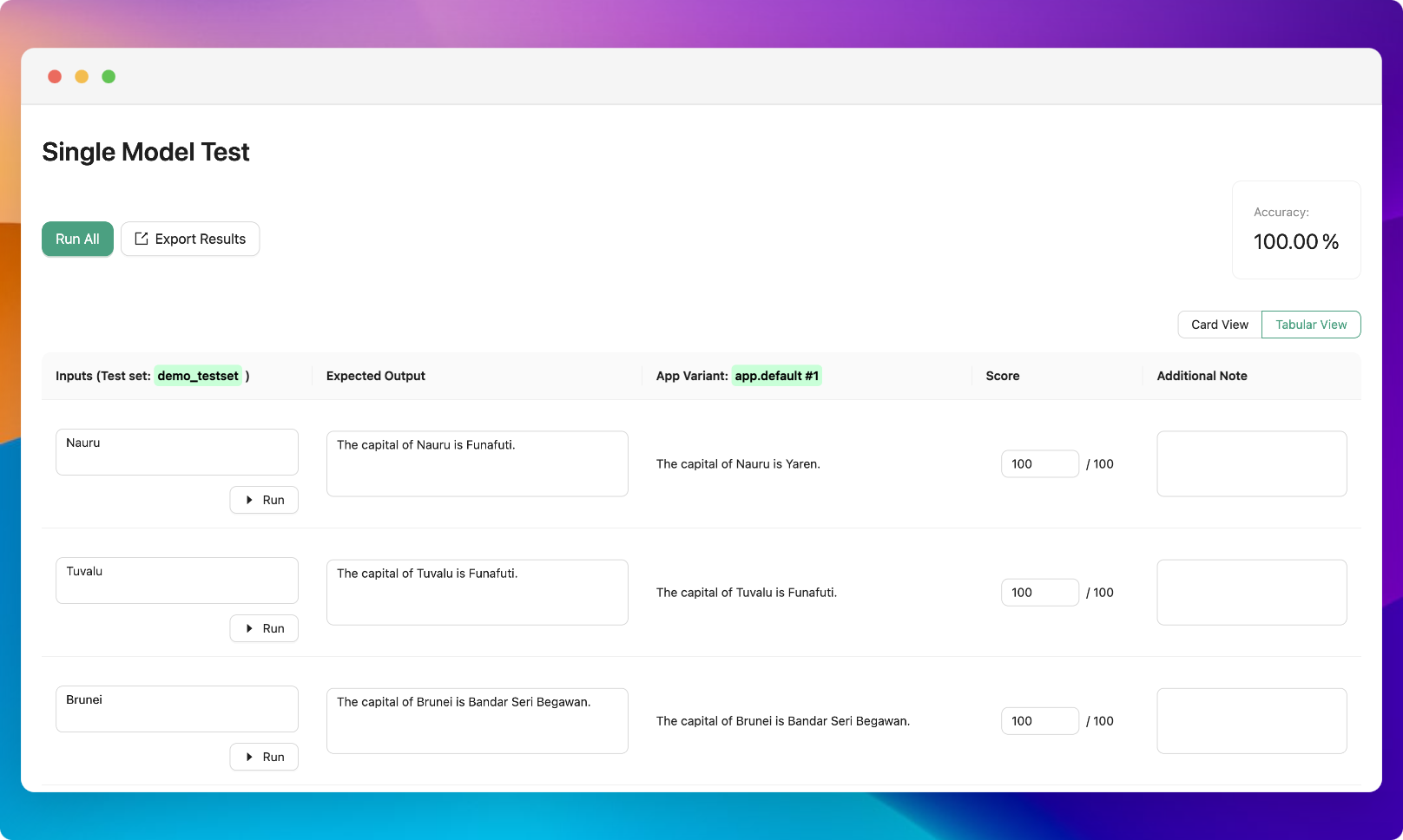

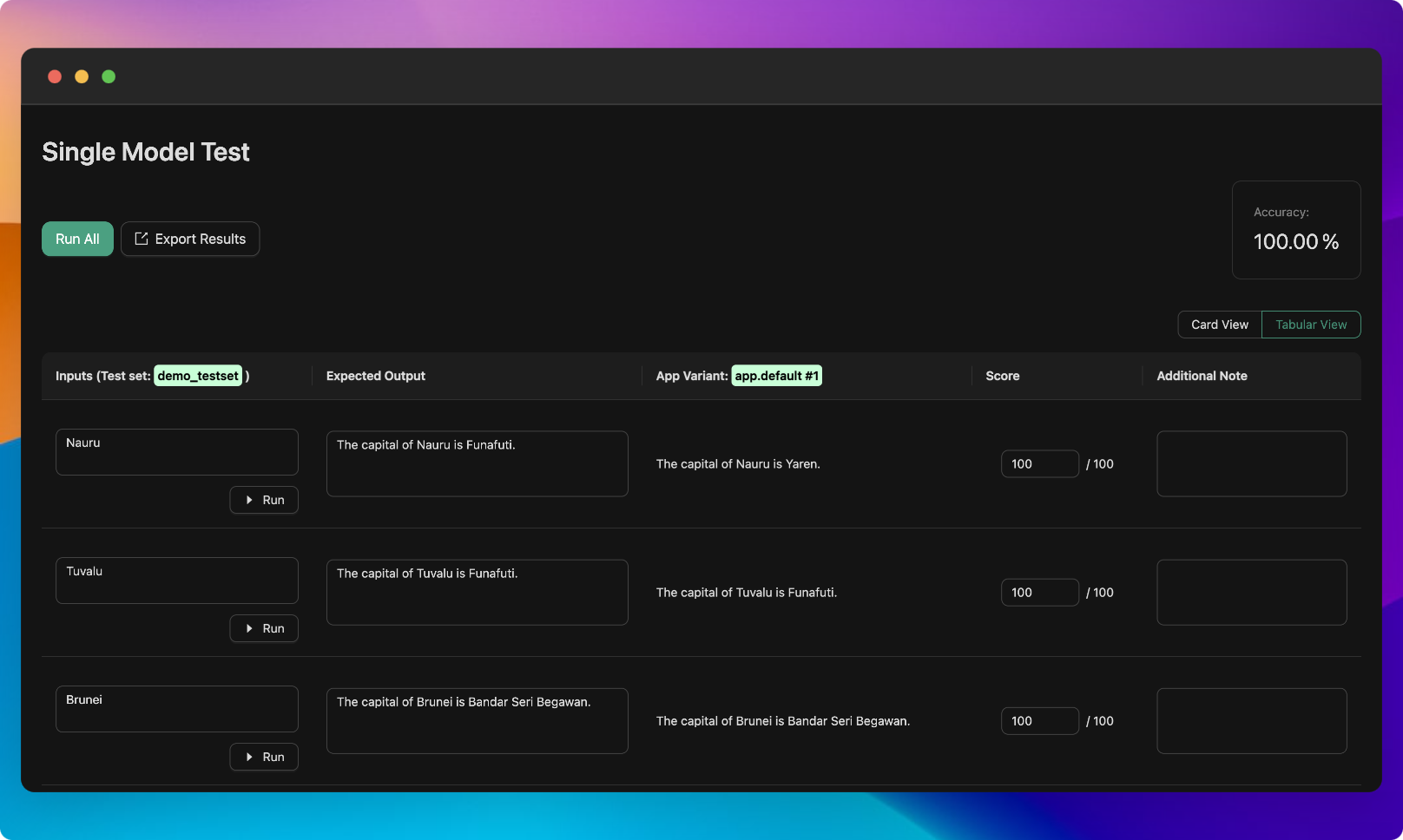

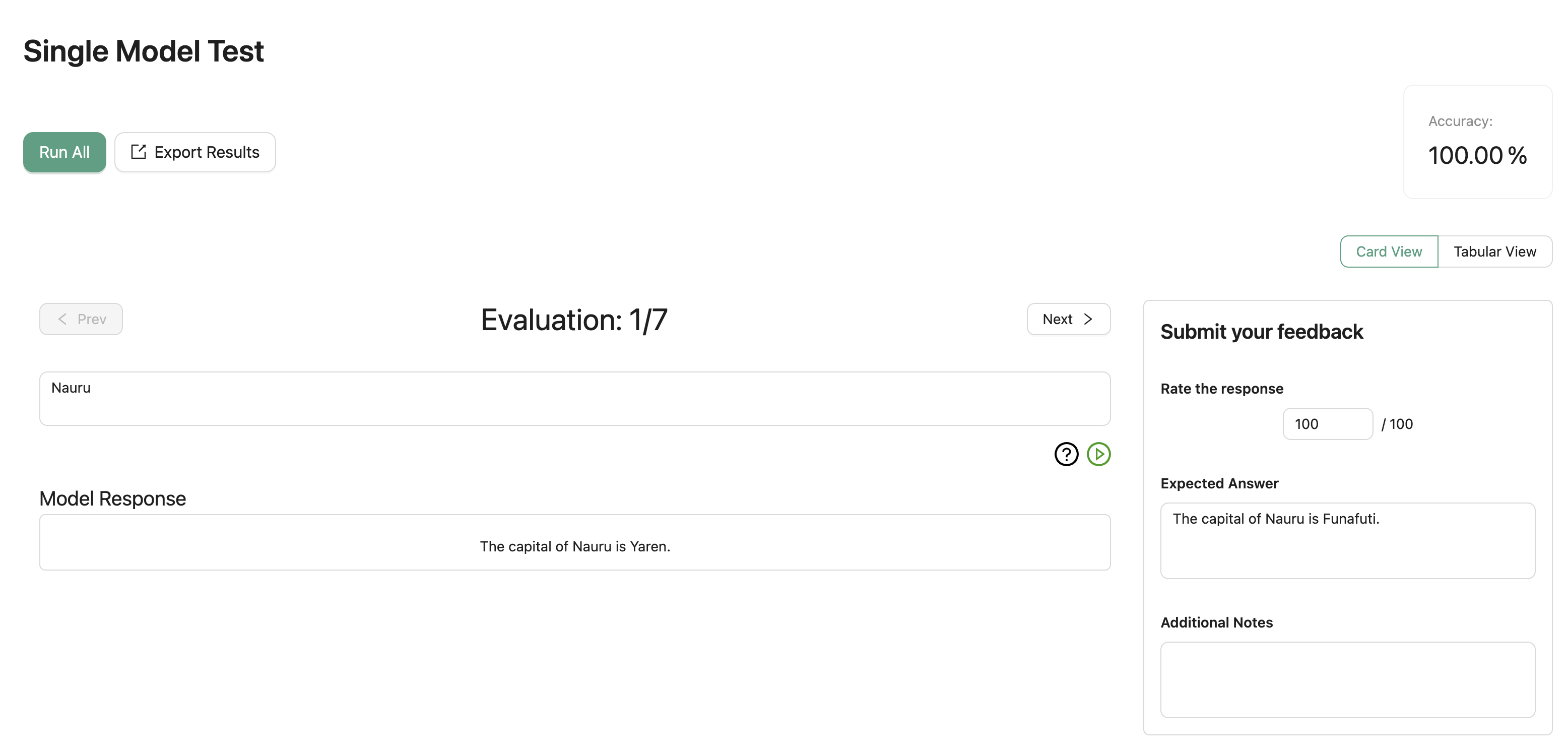

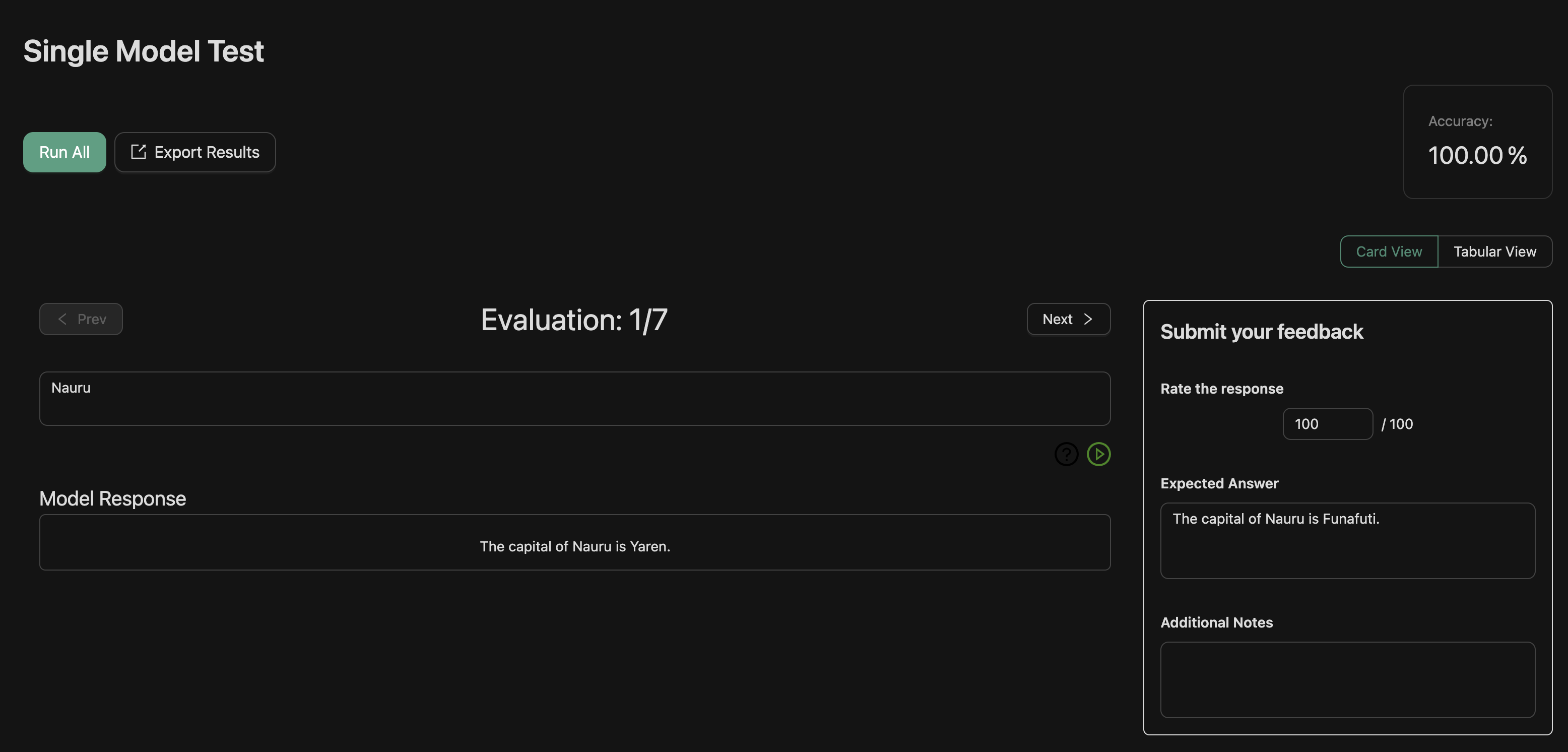

Single Model Evaluation

The single model test allows you to manually score the performance of a single LLM app.

To start a new evaluation with the single model test, follow these steps:

- Select the variant you would like to evaluate.

- Choose the testset you want to use for the evaluation.

Click on the "Start a new evaluation" button to begin the evaluation process. Once the evaluation is initiated, you will be directed to the Single Model Test Evaluation view. Here, you can perform the following actions:

- Score: Enter a numerical score to evaluate the performance of the chosen variant.

- Additional Notes: Add any relevant notes or comments to provide context or details about the evaluation.

- Export Results: Use the "Export Results" functionality to save and export the evaluation results.
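After exporting the results of a single model evaluation, you may want to summarize the scores outside the UI. The sketch below assumes the export is a CSV file with a header row and a numeric column named `score`. The column name, the file layout and the helper `summarize_scores` are hypothetical, so check them against your actual export.

```python
import csv
import io
from statistics import mean


def summarize_scores(csv_text: str, score_column: str = "score") -> dict:
    """Return count, mean, min and max of the scores in an exported CSV.

    Assumption: the export has a header row and a numeric column named
    `score_column` (hypothetical name; adjust it to match your file).
    Rows with an empty score (for example, not yet evaluated) are skipped.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    scores = [
        float(row[score_column])
        for row in reader
        # DictReader fills missing fields with None, so guard before strip().
        if (row.get(score_column) or "").strip() != ""
    ]
    if not scores:
        return {"count": 0, "mean": None, "min": None, "max": None}
    return {
        "count": len(scores),
        "mean": mean(scores),
        "min": min(scores),
        "max": max(scores),
    }


# Hypothetical export content, for illustration only.
example = """input,output,score,notes
What is 2+2?,4,5,correct
Capital of France?,Paris,4,
Translate 'hola',hi,,not scored yet
"""

print(summarize_scores(example))
# → {'count': 2, 'mean': 4.5, 'min': 4.0, 'max': 5.0}
```

Skipping empty scores keeps a partially completed evaluation from being averaged as if its unscored rows were zeros.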

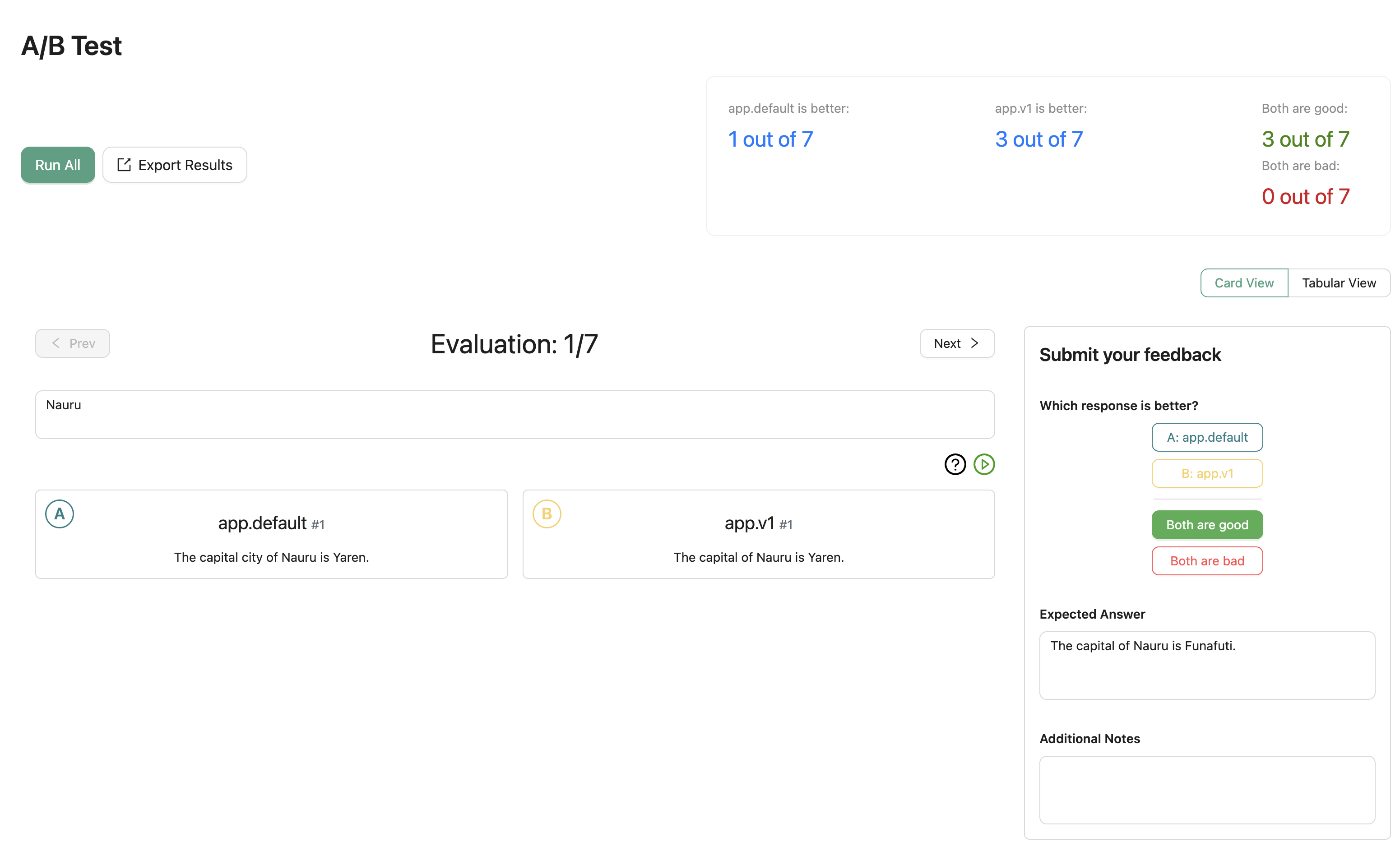

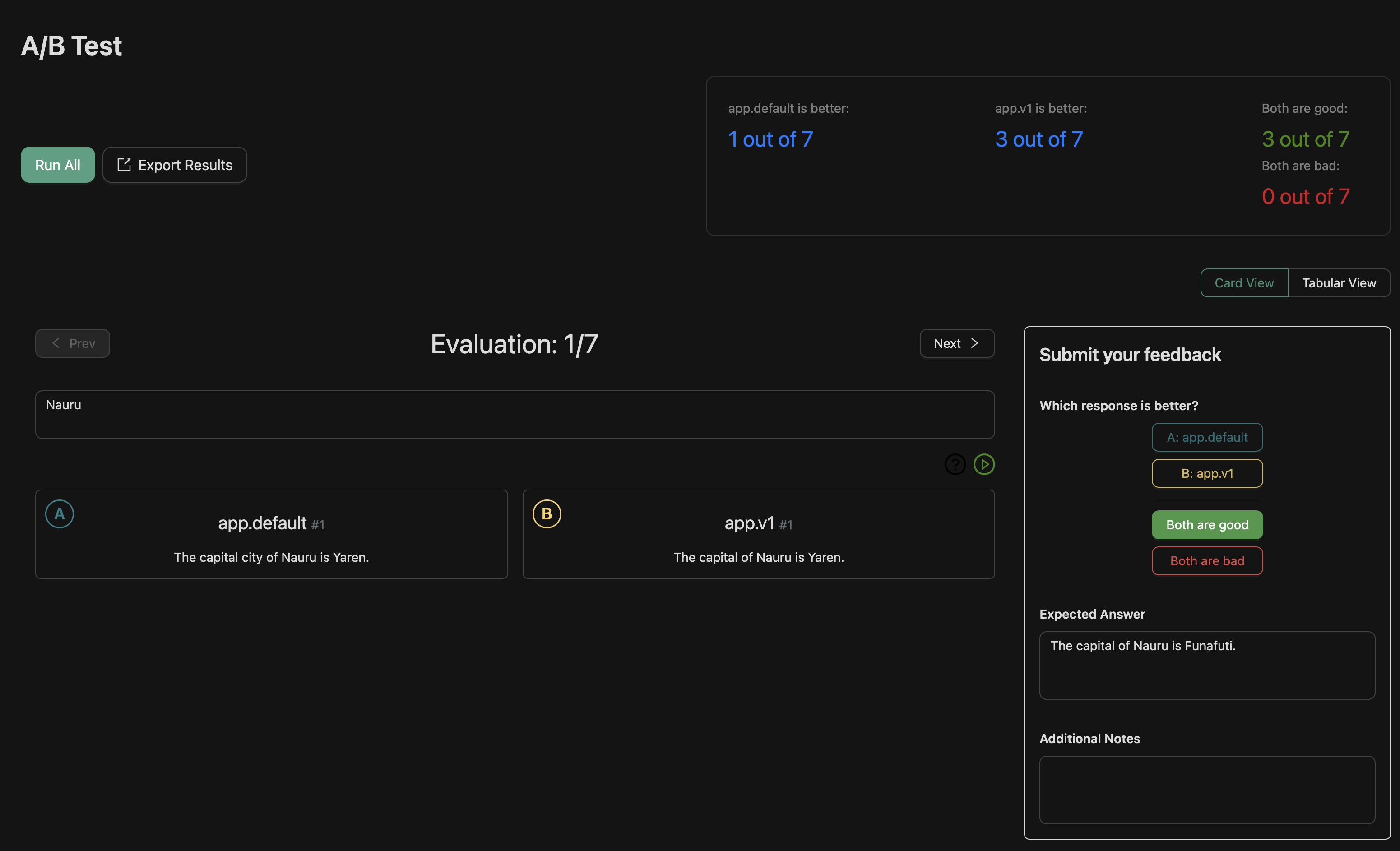

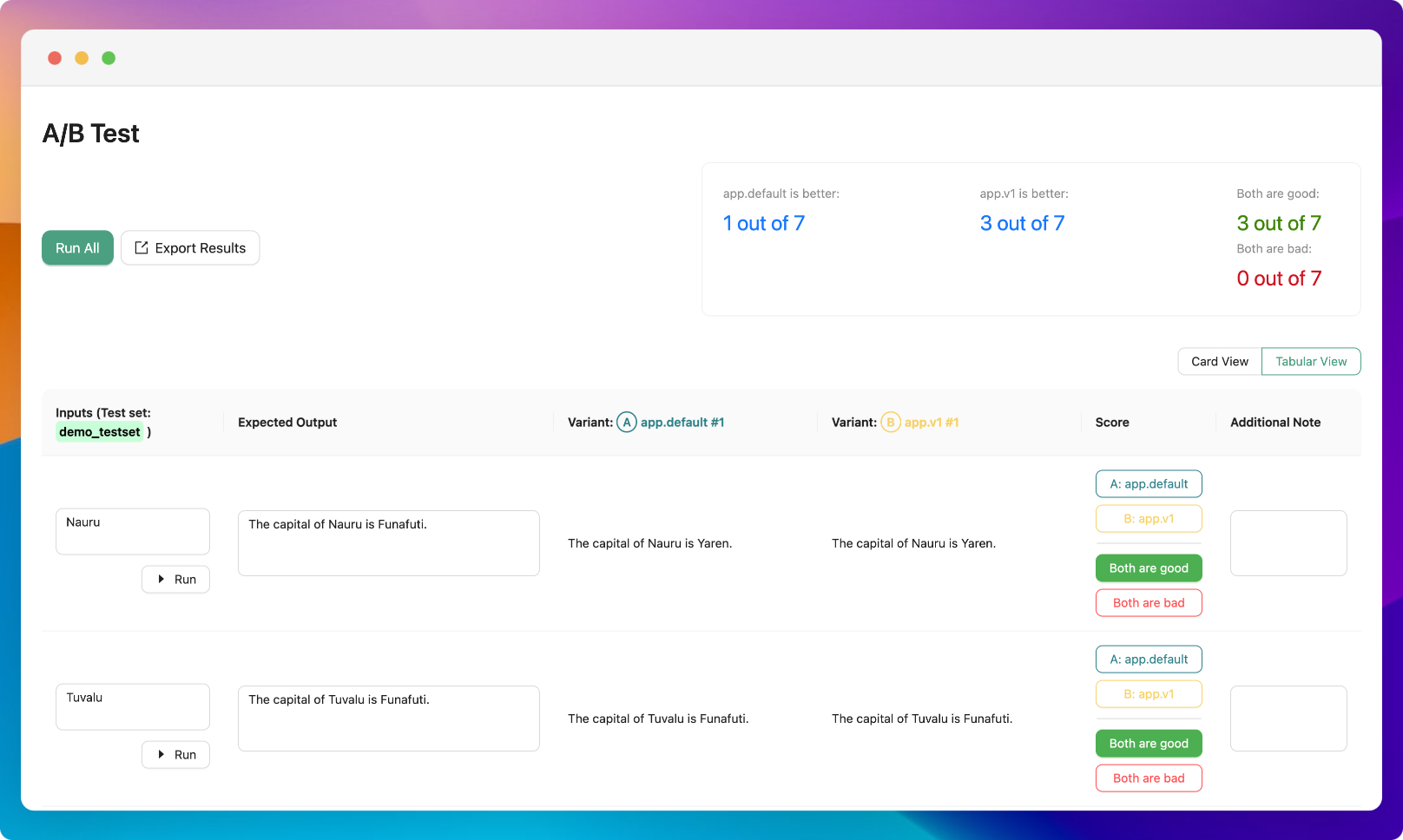

A/B Test

A/B tests allow you to manually compare the performance of two different variants. For each data point, you select which variant is better, or whether they are equally good or bad.
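Because each data point ends with one of four outcomes, the results of an A/B test reduce to a simple tally. The sketch below assumes the outcomes are recorded with the hypothetical labels `"A"`, `"B"`, `"both_good"` and `"both_bad"`; the tool's own labels may differ. The helper `tally_ab` is also hypothetical. It reports each variant's win rate over the data points where one variant was preferred.

```python
from collections import Counter

# Hypothetical labels for the four possible A/B outcomes.
OUTCOMES = ("A", "B", "both_good", "both_bad")


def tally_ab(votes: list[str]) -> dict:
    """Count A/B outcomes and compute win rates over decisive votes.

    A vote is decisive when one variant was preferred ("A" or "B").
    Ties ("both_good", "both_bad") are counted but excluded from the
    win-rate denominator.
    """
    unknown = set(votes) - set(OUTCOMES)
    if unknown:
        raise ValueError(f"unexpected outcome labels: {sorted(unknown)}")
    counts = Counter(votes)
    decisive = counts["A"] + counts["B"]
    return {
        "counts": {label: counts[label] for label in OUTCOMES},
        "win_rate_A": counts["A"] / decisive if decisive else None,
        "win_rate_B": counts["B"] / decisive if decisive else None,
    }


votes = ["A", "B", "A", "both_good", "A", "both_bad"]
print(tally_ab(votes))
# → {'counts': {'A': 3, 'B': 1, 'both_good': 1, 'both_bad': 1}, 'win_rate_A': 0.75, 'win_rate_B': 0.25}
```

Excluding ties from the denominator is one design choice among several; you could instead report ties as their own share of all data points.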

To start a new evaluation with an A/B Test, follow these steps:

- Select two variants that you would like to evaluate.

- Choose the testset you want to use for the evaluation.

Invite Collaborators

In an A/B Test, you can invite members of your workspace to collaborate on the evaluation by sharing a link to the evaluation. For information on how to add members to your workspace, please refer to this guide.

Click on the "Start a new evaluation" button to begin the evaluation process. Once the evaluation is initiated, you will be directed to the A/B Test Evaluation view. Here, you can perform the following actions:

- Scoring between variants: Evaluate and score the performance of each variant for the expected output.

- Additional Notes: Add any relevant notes or comments to provide context or details about the evaluation.

- Export Results: Use the "Export Results" functionality to save and export the evaluation results.

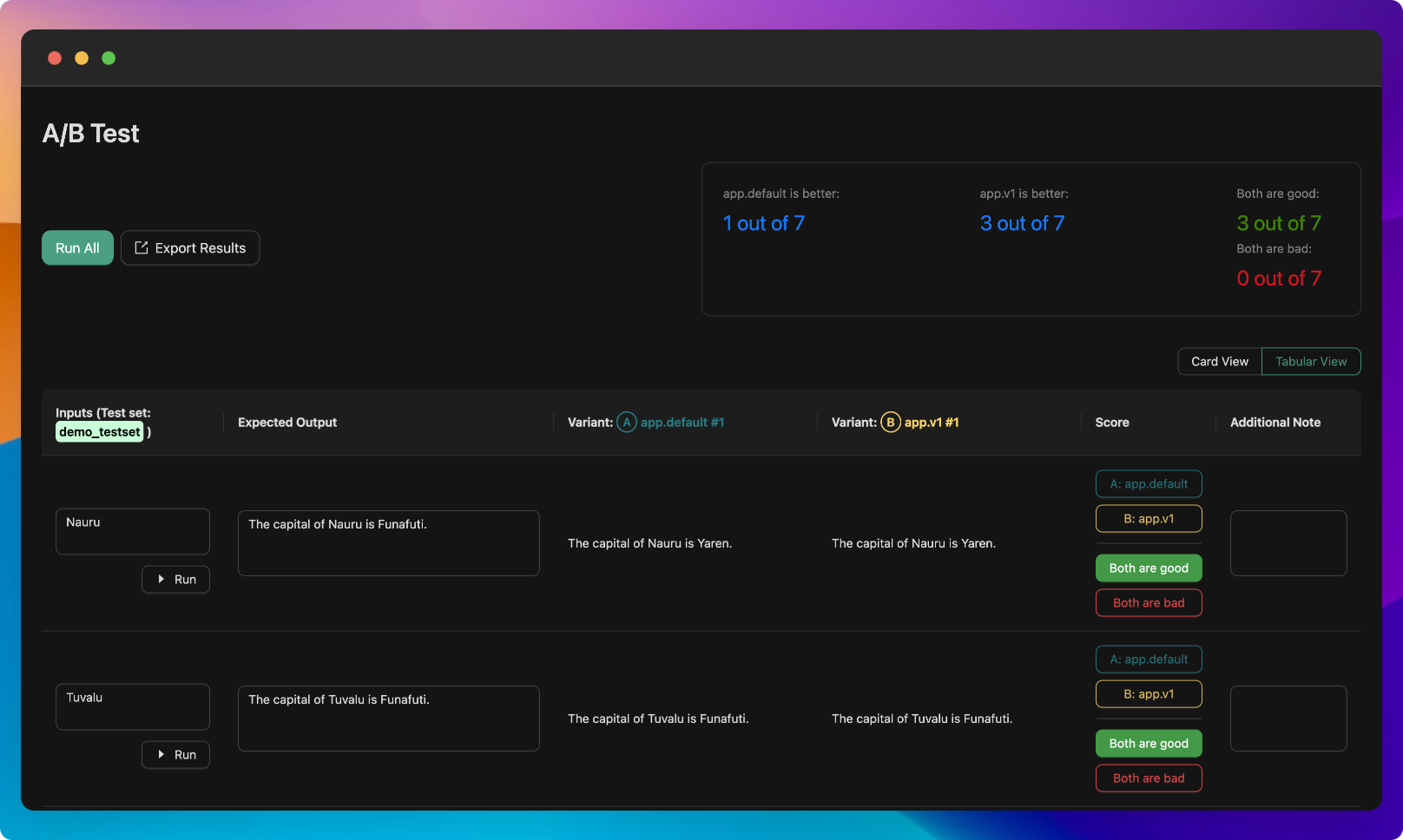

Toggle Evaluation View (Card/Table mode)

You can switch the evaluation view between card mode and table mode. The toggle is available in both the A/B Test view and the Single Model Test view.